In BI projects, sooner or later you will face the challenge of loading and processing vast amounts of data via SSIS packages, a job that can take days or even weeks. The first idea that comes to mind is usually to split the data into roughly equal heaps and to run the SSIS processing package in parallel. At least that was my first idea when I was asked to load several million files with a total of nearly 35 billion records into a database. Below I describe the approaches to parallel SSIS execution that I tried and the solution I finally settled on.

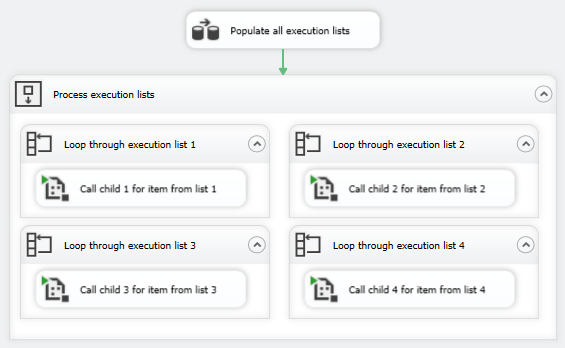

The server I worked on had 20 cores available solely for this task. In my first design, I started by preparing a processing package that receives the destination table name and the file name as parameters. To prevent deadlocks, I created 20 identical destination tables, each populated by a separate instance of the processing package. Next, sticking to standard SSIS components, I implemented a master package with 20 variables to hold the lists of files to be processed. I then fetched a list of all files to be processed, split it into roughly equal heaps (taking into account both the number of files and the size of each file) and populated the variables accordingly. Finally, I executed the processing package in 20 parallel Execute Package Tasks. The resulting master package is shown in the accompanying figure.
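As an aside on the splitting step: one simple way to build roughly equal heaps is a greedy "largest first" heuristic, where each file is assigned to whichever heap is currently cheapest. The sketch below is an illustration, not the code used in the project. `FileEntry`, `HeapBuilder` and the `perFileOverheadBytes` parameter are hypothetical names; the overhead value is an assumed way of letting the number of files count alongside their size.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical description of one file to be loaded.
public sealed class FileEntry
{
    public string Name { get; set; }
    public long SizeBytes { get; set; }
}

public static class HeapBuilder
{
    // Splits the files into heapCount lists of roughly equal cost.
    // Cost of a file = its size + a fixed per-file overhead (an assumption),
    // so that many small files weigh more than their bytes alone suggest.
    public static List<List<FileEntry>> Split(
        IEnumerable<FileEntry> files, int heapCount, long perFileOverheadBytes)
    {
        if (heapCount < 1) throw new ArgumentOutOfRangeException("heapCount");

        var heaps = new List<List<FileEntry>>();
        var totals = new long[heapCount];
        for (int i = 0; i < heapCount; i++) heaps.Add(new List<FileEntry>());

        // Largest files first, each one into the currently cheapest heap.
        foreach (var file in files.OrderByDescending(f => f.SizeBytes))
        {
            int target = 0;
            for (int i = 1; i < heapCount; i++)
            {
                if (totals[i] < totals[target]) target = i;
            }
            heaps[target].Add(file);
            totals[target] += file.SizeBytes + perFileOverheadBytes;
        }
        return heaps;
    }
}
```

Calling `HeapBuilder.Split(files, 20, someOverhead)` would yield the 20 lists that populate the master package variables. The heuristic does not guarantee an optimal split, but it is usually close enough for load balancing.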

This solution worked, but the performance wasn’t even close to what I expected. Checking the performance and resource monitors, I noticed that CPU utilization rarely hit the 50% mark, so most of the available CPU capacity sat idle. The main reason was most likely the overhead of starting a package execution, processing a single file within the package, finishing the execution and then starting the next one.

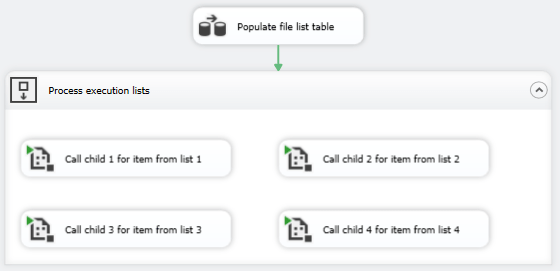

This led to the second design: I created a database table that stores the file list and its distribution into equal heaps. I also changed the processing package’s parameters so that it receives a list ID from the newly created table instead of a file name, and modified the package to read all file names belonging to that list from the table and load those files. The changed master package is shown in the accompanying figure.

With this change, the overhead dropped from one package execution per file to one execution per list of files. Performance improved slightly but was still far from acceptable.

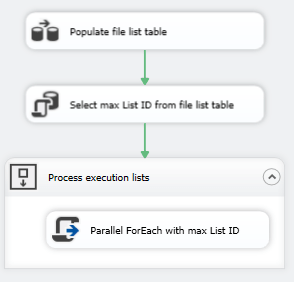

Leaving the path of standard SSIS components, I decided to have my master package create the executions in a C# Script Task. Luckily, starting with .NET 4.0, the framework natively supports parallelism with the Parallel.For() and Parallel.ForEach() loops. Basically, this does what I had already tried to implement with standard SSIS components: create numerous parallel instances of the same package. This version reuses the file list table and the processing package from the previous one; its master package is shown in the accompanying figure.

This gave the performance a really good boost: CPU utilization reached roughly 95%. But are we done? Unfortunately not: as it turned out, the 95% CPU utilization could not be sustained. After a certain time, utilization dropped back to 50% or less and did not recover. The cause appeared to be the Parallel.ForEach() loop, which had problems creating parallel executions of SSIS packages, even with a forced delay of half a second between executions. Task Manager showed the same picture: the number of running SSIS instances went down to 10 or fewer.
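To make the Parallel.ForEach() approach concrete, here is a minimal sketch of such a launcher. It is not the original script. `ParallelForEachLauncher` is a made-up name, and `runPackageForList` is a hypothetical callback standing in for whatever actually starts the processing package for one list ID and blocks until it finishes.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ParallelForEachLauncher
{
    // Runs the (hypothetical) package callback once per list ID,
    // with at most maxParallel iterations in flight at the same time.
    public static void RunAll(
        IEnumerable<int> listIds, int maxParallel, Action<int> runPackageForList)
    {
        var options = new ParallelOptions { MaxDegreeOfParallelism = maxParallel };

        // Parallel.ForEach returns only after all iterations have completed.
        Parallel.ForEach(listIds, options, listId => runPackageForList(listId));
    }
}
```

Note that `MaxDegreeOfParallelism` is only an upper limit. The runtime decides how many iterations actually run concurrently at any moment, which may or may not be related to the drop in running instances described above.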

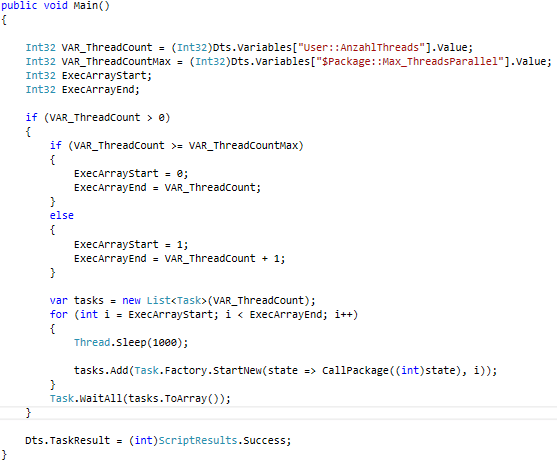

This led to my final design: I slightly modified the C# Script Task from the previous version. Instead of the Parallel.ForEach() loop, I used a list of C# tasks to handle the parallel execution of the processing package. The essential section of the C# script follows:

This change resulted in a steady count of 21 running SSIS instances (1 instance of the master package plus 20 instances of the processing package) and a sustained CPU utilization of 98%. With that, performance was finally good enough to load the amount of data mentioned above.
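For readers who want to try a task-list launcher of the kind described above, a minimal sketch could look like this. It is an illustration under assumptions, not the original script. `TaskListLauncher` is a made-up name, `runPackageForList` is again a hypothetical callback that runs the processing package for one list ID and blocks until it finishes, and the `TaskCreationOptions.LongRunning` hint is my own choice rather than something confirmed for the original.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class TaskListLauncher
{
    // Starts one task per list ID up front and waits for all of them.
    public static void RunAll(IEnumerable<int> listIds, Action<int> runPackageForList)
    {
        var tasks = new List<Task>();

        foreach (int listId in listIds)
        {
            int id = listId; // local copy, so each closure keeps its own value

            // LongRunning hints that the work blocks for a long time and
            // should not occupy a regular thread-pool worker (assumption).
            tasks.Add(Task.Factory.StartNew(
                () => runPackageForList(id),
                TaskCreationOptions.LongRunning));
        }

        // Blocks until every task has finished; throws AggregateException
        // if any of the callbacks threw.
        Task.WaitAll(tasks.ToArray());
    }
}
```

Compared with Parallel.ForEach(), this variant creates all 20 tasks explicitly rather than leaving the degree of concurrency to the loop's partitioning.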
