With this blog post i want to continue the series on explaining Data Vault Loads. Today i want to describe the load pattern for Data Vault Satellite. In a Data Vault model satellites are used to store descriptive attributes associated with Hubs or Links with tracking of all historical values. Therefore the primary key of a satellite is a two part key build by the Surrogate Key of the Hub/Link, which it is attached to, extended by a datetime-stamp. With these two mandantory fields tracking of historical data is possible.

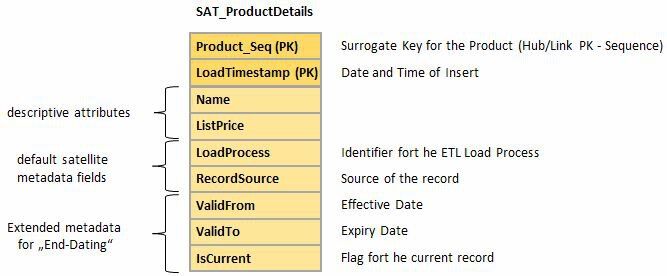

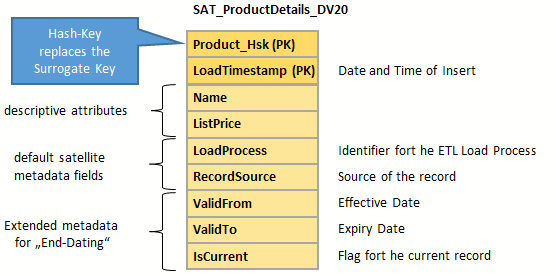

The default Satellite has pros and cons. On the one hand the load of this default satellite is very simple because it works „Insert-Only“ – similar to Hub Loads. On the other hand the further work with this default satellite is very complex. To reduce complexity in general and to achieve an improve of speed, reusability, parallelism and other reasons i recommend to extend the default satellite with additional fields to prepare it for the use with „End-Dating“. For this purpose two metadata-fields for effective date/time (ValidFrom) and expiry date/time (ValidTo) are added. Because use cases often require current information without history, an additional field is added to allow an easy identification or current data. Therefore in this post i focus on loading satellites with „End-Dating“.

Data Vault Satellite Load 1.0

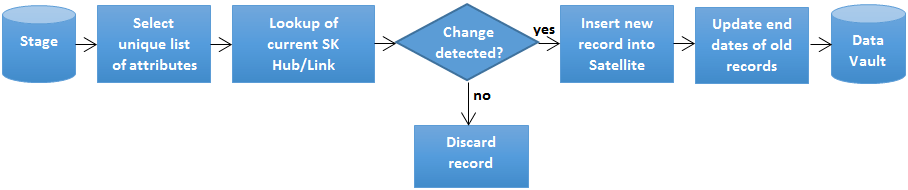

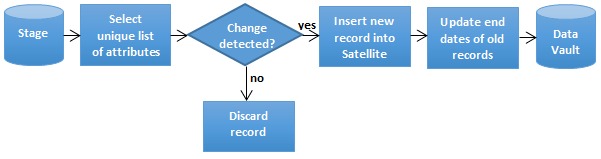

The function of a satellite load is to select the unique list of attributes, lookup the Surrogate Key of the attached Hub/Link and check for changes against the current record in the satellite. If no change is detected, discard the record from the pipeline. If a change is deteced, insert the new record into the satellite as current record and update the previous records end date.

Example

To explain satellite loads i am using a well-known example from the Adventure Works 2012 Data Base. The example load is supposed to load product information into the Data Vault Model. The destination table SAT_ProductDetails has the following structure:

The following T-SQL and SSIS Implementations will illustrate the concept of Data Vault Satellite 1.0 Loads:

T-SQL Implementation

In this example a stored procedure Load_SAT_ProductDetails represents the Satellite Load Pattern. Basis of this approach is the SQL Merge command. The Parameter @LoadProcess has to be set by the ETL Process that executes the procedure. It can be generated by any tool or scheduler that is running your ETL-Workflow. If you use a SSIS Package to execute your stored procedure i recommend to use the ServerExecutionID within SSIS.

First some variables are declared for values that are used several times. The variable @DefaultValidFrom stores the initial Effective Date for the first occurance of a record. In this example January, 1st 1900 is sufficient to handle all use cases for the adventure works example. Of course this can be adjusted if it is required.

For the selection of unique attributes from stage, the query uses a distinct statement. This works for a non persistent staging area where is ensured, that a change can only occure once per record. Otherwise the merge statement returns an error, because it cannot update the same row of the target table multiple times. In this case you have to extend the query to meet this requirement. This can be done in many different ways, which I will not describe in detail here because it is beyond the scope of this post.

The unique attributes from source are looked up by the Hub/Link-Key Product_seq and IsCurrent=1 to lookup against the current record. For existing records a change detection based on a comparison of each attribute is used. In this example i assume all attributes never can become NULL. If NULL-Values can occure, the compare needs to be extended to handle them.

Alternatively the comparison can be done by CDC-Hashes.

For detected changes the old record in the target table will be outdated. A current record is inserted by the overlying insert-statement, which uses the MergeOutput as source. New records are added.

For purpose of integration wihtin a workflow the stored procedure is extended with an errorhandling, which returns the result of the execution. This resultset can be customized to deliver further information about the execution, like rowcounts for the several operations within the merge statement.

-

CREATE PROCEDURE DataVault.Load_SAT_ProductDetails

-

@LoadProcess BIGINT

-

As

-

-

DECLARE @RecordSource nvarchar(100)

-

DECLARE @DefaultValidFrom datetime2(0) –use datetime2(0) to remove milliseconds

-

Declare @DefaultValidTo datetime2(0)

-

DECLARE @LoadDateTime datetime2(0)

-

-

SET @RecordSource = N’AdventureWorks2012.Product.Product‘

-

SET @DefaultValidFrom = ‚1900-01-01‘

-

SET @DefaultValidTo = ‚9999-12-31‘

-

SET @LoadDateTime = GETDATE()

-

-

BEGIN TRY

-

Begin Transaction

-

-

–Insert new current records for changed records

-

INSERT INTO DataVault.SAT_ProductDetails

-

(

-

Product_Seq,LoadTimestamp,Name,ListPrice, LoadProcess, RecordSource, ValidFrom,ValidTo, IsCurrent

-

)

-

SELECT

-

Product_Seq,

-

@LoadDateTime, –LoadDatetimeStamp

-

Name,

-

ListPrice,

-

@LoadProcess as LoadProcess,

-

@RecordSource as RecordSource,

-

@LoadDateTime, –Actual DateTimeStamp

-

@DefaultValidTo, –Default Expiry DateTimestamp

-

1 –IsCurrent Flag

-

FROM

-

(

-

MERGE DataVault.SAT_ProductDetails AS Target –Target: Satellite

-

USING

-

(

-

— Query distinct set of attributes from source (stage)

-

— includes lookup of business key by left outer join referenced hub/link

-

SELECT distinct

-

hub.Product_Seq,

-

stage.Name,

-

stage.ListPrice

-

FROM stage.Product_Product_AdventureWorks2012 as stage

-

LEFT OUTER JOIN DataVault.Product_Hub as hub on stage.ProductNumber=hub.Product_BK

-

WHERE hub.Product_Seq is not null

-

) AS Source

-

ON Target.Product_Seq = Source.Product_Seq –Identify Columns by Hub/Link Surrogate Key

-

AND Target.IsCurrent = 1 –and only merge against current records in the target

-

–when record already exists in satellite and an attribute value changed

-

WHEN MATCHED AND

-

(

-

Target.Name <> Source.Name

-

OR Target.ListPrice <> Source.ListPrice

-

)

-

— then outdate the existing record

-

THEN UPDATE SET

-

IsCurrent = 0,

-

ValidTo = @LoadDateTime

-

— when record not exists in satellite, insert the new record

-

WHEN NOT MATCHED BY TARGET

-

THEN INSERT

-

(

-

Product_Seq, LoadTimestamp, Name, ListPrice, LoadProcess, RecordSource, ValidFrom, ValidTo, IsCurrent

-

)

-

VALUES

-

(

-

Source.Product_Seq,

-

@LoadDateTime,

-

Source.Name,

-

Source.ListPrice,

-

@LoadProcess,

-

@RecordSource,

-

@DefaultValidFrom, –Default Effective DateTimeStamp

-

@DefaultValidTo, –Default Expiry DateTimeStamp

-

1 –IsCurrent Flag

-

)

-

— Output changed records

-

OUTPUT

-

$action AS Action

-

,Source.*

-

) AS MergeOutput

-

WHERE MergeOutput.Action = ‚UPDATE‘

-

AND Product_Seq IS NOT NULL;

-

-

Commit

-

SELECT

-

‚Success‘ as ExecutionResult

-

RETURN;

-

END TRY

-

-

BEGIN CATCH

-

-

IF @@TRANCOUNT > 0

-

ROLLBACK

-

-

SELECT

-

‚Failure‘ as ExecutionResult,

-

ERROR_MESSAGE() AS ErrorMessage;

-

RETURN;

-

END CATCH

-

-

GO

SQL Server Integration Services – Implementation

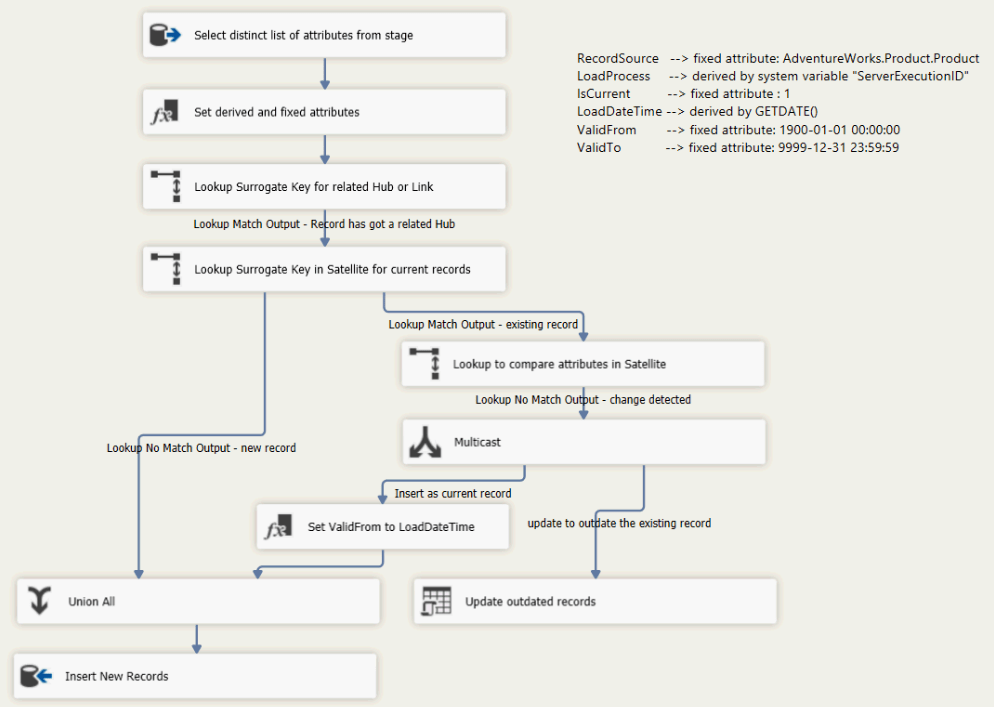

When we model Data Warehouse Loads, we want to use an ETL Tool like Microsoft SQL Server Integration Services. This sample shows how the for pattern loading Data Vault Satellites 1.0 can be implemented in Integration Services.

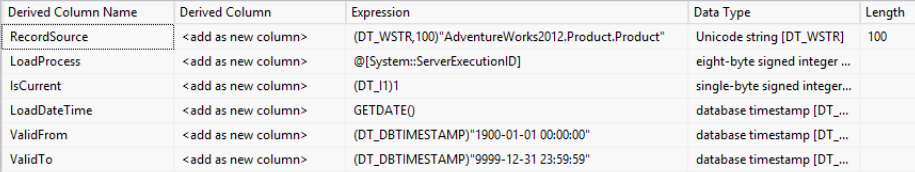

In this example the whole load process is implemented wihtin one data flow task. First a unique list of attributes is selected from the stage by the following query:

-

SELECT DISTINCT

-

ProductNumber as Product_BK,

-

Name,

-

ListPrice

-

FROM stage.Product_Product_AdventureWorks2012

This data set is extended by the following derived and fixed attributes:

For Data Vault Satellite Loads 1.0 we need to lookup the current Surrogate Key for the related Hub/Link. This is done in the fist lookup. Non matching rows are discarded by the lookup, because we can not load a Satellite wihtout having a related Hub or Link. The Surrogate Key (Product_Seq) is added to the matching rows. For the matching rows the second lookup checks if the record already exists in the satellite. If it not exists in the Satellite a new record can be written. For the existing records in the satellite the third lookup compares all satellite attributes. If no match is found, that means there is noch change and the record can be discarded. If the lookup can not find a match, an attribute has changed. In this case we need two operations to perform the change. First we need to insert a new current record with the actual LoadDateTime as effective date. For the old record we have to perform an update and set the expiry date to the actual LoadDateTime and IsCurrent to „0“ (zero).

In this example i show a very simple version of an SSIS implementation. It is recommended to extend this pattern to your own requirements by adding additional functionalities like integration of metadata or logging.

Data Vault Satellite Load 2.0

The main difference between Satellite Loads in Data Vault 1.0 and Data Vault 2.0 ist, that lookup of a Surrogate Key for the referencing Hub/Link is no longer necessary. This is usually a bottleneck and represents a synchronization point during ETL-processing. With Data Vault 2.0 the Satellite can be loaded without any lookup and without waiting for the referencing Hub/Link to be processed.

Example

The difference in modeling between Data Vault 1.0 and Data Vault 2.0 is the replacement of sequences by hash keys. As opposed to sequences, a hash-key is not generated by the data warehouse regardless of the underlying data. It is derived by the business key and computed when entering the staging-area of the data warehouse.

The following T-SQL and SSIS Implementations will illustrate the concept of Data Vault Satellite 2.0 Loads:

T-SQL Implementation

In this example a stored procedure Load_SAT_ProductDetails_DV20 represents the Satellite Load Pattern for Data Vault 2.0. It works similar to the DV 1.0 stored procedure. The Sequences Product_Seq are replaced by hash keys. The main difference is the Using within the Merge-statement, which only selects the input data from the staging-area without performing a lookup to its referenced Hub/Link.

-

CREATE PROCEDURE DataVault.Load_SAT_ProductDetails_DV20

-

@LoadProcess BIGINT

-

As

-

-

DECLARE @RecordSource nvarchar(100)

-

DECLARE @DefaultValidFrom datetime2(0) –use datetime2(0) to remove milliseconds

-

Declare @DefaultValidTo datetime2(0)

-

DECLARE @LoadDateTime datetime2(0)

-

-

SET @RecordSource = N’AdventureWorks2012.Product.Product‘

-

SET @DefaultValidFrom = ‚1900-01-01‘

-

SET @DefaultValidTo = ‚9999-12-31‘

-

SET @LoadDateTime = GETDATE()

-

-

BEGIN TRY

-

Begin Transaction

-

-

–Insert new current records for changed records

-

INSERT INTO DataVault.SAT_ProductDetails_DV20

-

(

-

Product_Hsk,LoadTimestamp,Name,ListPrice, LoadProcess, RecordSource, ValidFrom,ValidTo, IsCurrent

-

)

-

SELECT

-

Product_Hsk, –Hash Key

-

@LoadDateTime, –LoadDatetimeStamp

-

Name,

-

ListPrice,

-

@LoadProcess as LoadProcess,

-

@RecordSource as RecordSource,

-

@LoadDateTime, –Actual DateTimeStamp

-

@DefaultValidTo, –Default Expiry DateTimestamp

-

1 –IsCurrent Flag

-

FROM

-

(

-

MERGE DataVault.SAT_ProductDetails_DV20 AS Target –Target: Satellite

-

USING

-

(

-

— Query distinct set of attributes from source (stage)

-

SELECT distinct

-

stage.Product_Hsk,

-

stage.Name,

-

stage.ListPrice

-

FROM stage.Product_Product_AdventureWorks2012_DV20 as stage

-

) AS Source

-

ON Target.Product_Hsk = Source.Product_Hsk –Identify Columns by Hub/Link Hash Key

-

AND Target.IsCurrent = 1 –and only merge against current records in the target

-

–when record already exists in satellite and an attribute value changed

-

WHEN MATCHED AND

-

(

-

Target.Name <> Source.Name

-

OR Target.ListPrice <> Source.ListPrice

-

)

-

— then outdate the existing record

-

THEN UPDATE SET

-

IsCurrent = 0,

-

ValidTo = @LoadDateTime

-

— when record not exists in satellite, insert the new record

-

WHEN NOT MATCHED BY TARGET

-

THEN INSERT

-

(

-

Product_Hsk, LoadTimestamp, Name, ListPrice, LoadProcess, RecordSource, ValidFrom, ValidTo, IsCurrent

-

)

-

VALUES

-

(

-

Source.Product_Hsk,

-

@LoadDateTime,

-

Source.Name,

-

Source.ListPrice,

-

@LoadProcess,

-

@RecordSource,

-

@DefaultValidFrom, –Default Effective DateTimeStamp

-

@DefaultValidTo, –Default Expiry DateTimeStamp

-

1 –IsCurrent Flag

-

)

-

— Output changed records

-

OUTPUT

-

$action AS Action

-

,Source.*

-

) AS MergeOutput

-

WHERE MergeOutput.Action = ‚UPDATE‘

-

AND Product_Hsk IS NOT NULL;

-

-

Commit

-

SELECT

-

‚Success‘ as ExecutionResult

-

RETURN;

-

END TRY

-

-

BEGIN CATCH

-

-

IF @@TRANCOUNT > 0

-

ROLLBACK

-

-

SELECT

-

‚Failure‘ as ExecutionResult,

-

ERROR_MESSAGE() AS ErrorMessage;

-

RETURN;

-

END CATCH

-

-

GO

SQL Server Integration Services – Implementation

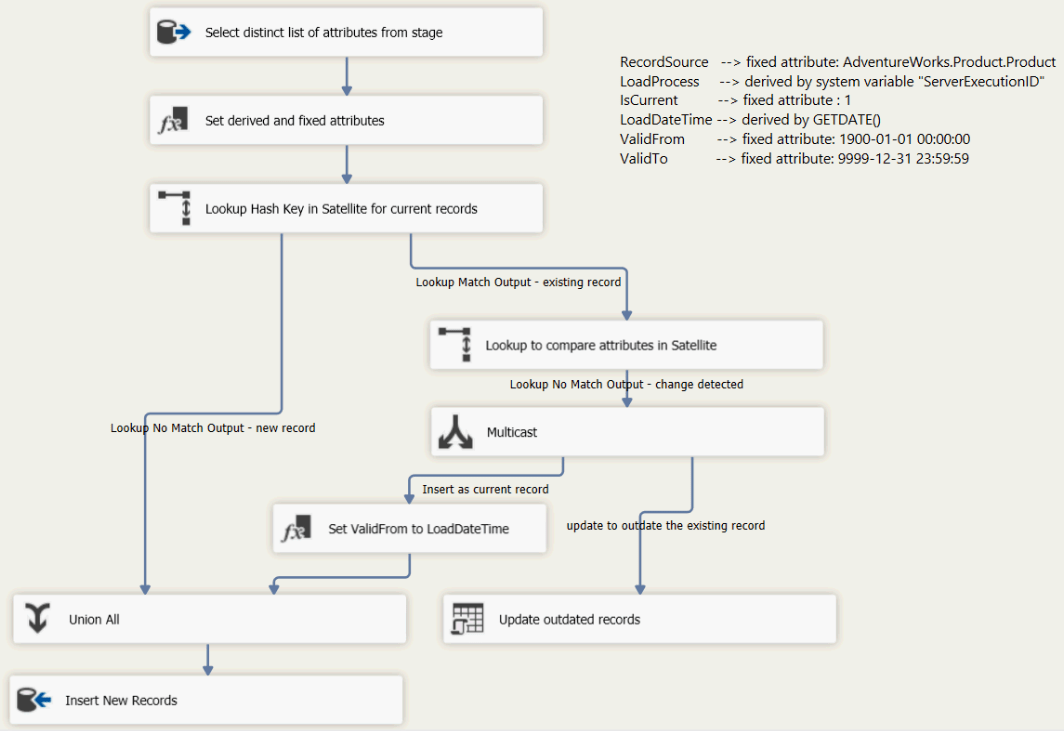

This sample shows how the for pattern loading Data Vault Satellites 2.0 can be implemented in Integration Services.

It is similar to the loading pattern for a Data Vault Satellite 1.0. The main difference is, that in this implementation we only need two lookups. We do not have to lookup a Surrogate Key of the related Hub or Link table. For the remaining two lookups the Hash-Key (Product_Hsk) delivered by the source (stage) is used as lookup column. Again, a simple version of the implementation has been presented, which can be extended individually.

Conclusion

The shown implementations are examples to explain how a Satellite Load works. Each individual project will require individual implementations of these patterns.

A Satellite Load is a simple pattern, which can be easily repeated for every entity in the data model. However, it is not recommend to repeat this manually. To take the most advantage of the Data Vault characteristics it is highly recommend to generate the Data Vault Satellite Loads to achieve a high degree of scalability and procutivity.

Kommentare (0)